A Model Context Protocol (MCP) server is a specialized service that acts as a bridge between a large language model (LLM)–driven AI application and external tools, data sources, or workflows. Conceptually, an MCP server “provides context” to the AI by executing requests on the model’s behalf. When an AI agent needs data from a database or must call an external API, it sends a structured MCP request to the MCP server. The server connects to the appropriate system, performs the action or query, and returns the results in a standardized format the AI can understand.
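MCP messages are encoded as JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. As a minimal sketch of that structured request and its standardized result, the Python below builds both messages as plain dictionaries. The tool name `query_orders` and its arguments are hypothetical, and in practice an MCP SDK would usually construct these messages for you.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def make_tool_result(request_id, text, is_error=False):
    """Build the matching JSON-RPC 2.0 response carrying a text result."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # echoes the request id so the client can pair them
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,
        },
    }


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call(1, "query_orders", {"customer_id": 42})
response = make_tool_result(1, "2 open orders")

print(json.dumps(request))
print(json.dumps(response))
```

The `id` field is what lets a client keep several requests in flight and still match each response to the request that produced it.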

An MCP server can be thought of as a universal connector for AI systems. It standardizes how AI applications interact with the outside world, eliminating the need for custom, brittle integrations for every new tool or data source. This standardization is critical as AI systems scale and need to interact with many internal and external systems.

Why MCP Servers Are Needed

On their own, large language models are isolated systems. They generate responses based on training data and prompt context but cannot directly access live databases, internal tools, or enterprise systems. Without a standard protocol such as MCP, every integration between an AI model and a system must be built by hand, leading to duplicated effort, maintenance overhead, and tight coupling between models and tools.

MCP servers solve this by creating a clean separation of concerns. The AI focuses on reasoning and decision-making, while the MCP server handles data access and actions. This turns AI from a static text generator into an operational system capable of interacting with real-world infrastructure.

How MCP Servers Work (Architectural View)

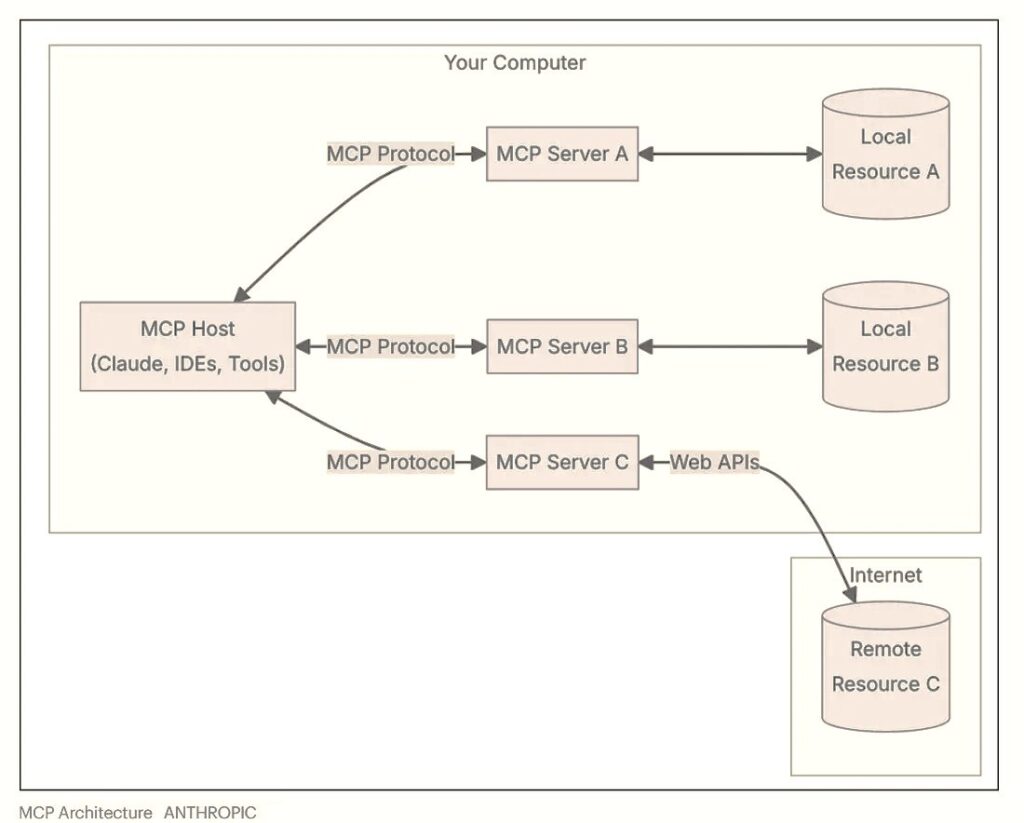

At an architectural level, MCP follows a client–server model. The AI application acts as the host and contains the language model. Within the host, MCP clients manage connections to MCP servers. Each MCP server exposes a specific set of capabilities, such as querying a database, reading files, or triggering workflows.
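The host/client/server split can be sketched in a few classes. This is a toy, in-process model under stated assumptions: `ToyServer`, `ToyClient`, and `Host` are hypothetical names, servers are plain objects rather than separate processes, and the `initialize` handshake that real MCP sessions begin with is skipped. What it shows is capability discovery: the host asks each server for its tools via `tools/list`, then routes each tool call to the single client connected to the server that offers it.

```python
class ToyServer:
    """Stand-in for an MCP server; real servers usually run as separate processes."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # maps tool name -> Python callable

    def handle(self, message):
        # Minimal dispatch on the JSON-RPC method name.
        if message["method"] == "tools/list":
            tools = [{"name": n, "inputSchema": {"type": "object"}} for n in self._tools]
            return {"jsonrpc": "2.0", "id": message["id"], "result": {"tools": tools}}
        if message["method"] == "tools/call":
            params = message["params"]
            text = self._tools[params["name"]](**params.get("arguments", {}))
            return {"jsonrpc": "2.0", "id": message["id"],
                    "result": {"content": [{"type": "text", "text": text}]}}
        raise ValueError(f"unsupported method {message['method']}")


class ToyClient:
    """Holds exactly one server connection and numbers its requests."""

    def __init__(self, server):
        self.server = server
        self._next_id = 0

    def request(self, method, params=None):
        self._next_id += 1
        msg = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            msg["params"] = params
        return self.server.handle(msg)["result"]


class Host:
    """The AI application: owns one client per server and a tool routing table."""

    def __init__(self, servers):
        self.clients = [ToyClient(s) for s in servers]
        self.routes = {}
        for client in self.clients:
            for tool in client.request("tools/list")["tools"]:
                self.routes[tool["name"]] = client

    def call(self, tool_name, **arguments):
        client = self.routes[tool_name]
        result = client.request("tools/call", {"name": tool_name, "arguments": arguments})
        return result["content"][0]["text"]


# Two hypothetical servers, each focused on one domain.
db_server = ToyServer("database", {"count_users": lambda: "3 users"})
fs_server = ToyServer("files", {"read_file": lambda path: f"contents of {path}"})
host = Host([db_server, fs_server])

print(sorted(host.routes))
print(host.call("read_file", path="notes.txt"))
```

Because routing is driven by discovery, adding a third server only means passing it to `Host`; the calling code never needs to know which system sits behind a tool.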

When the AI determines that it needs external information or needs to perform an action, it issues a structured request through the MCP client. The MCP server receives the request, executes the corresponding operation against the underlying system, and returns the result. The AI then incorporates this result into its reasoning and response.
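The cycle just described can be sketched as a loop. Everything here is a stand-in: `fake_model` is a hypothetical scripted function in place of a real LLM, `mcp_server_handle` answers from an in-memory dictionary instead of a real system, and the `{"role": "tool", ...}` message format is an assumption, since how tool results are fed back to a model depends on the model provider's API.

```python
def fake_model(messages):
    """Hypothetical stand-in for an LLM: requests a tool once, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "get_order_status", "arguments": {"order_id": "A17"}}}
    return {"answer": f"Your order is {tool_results[-1]['content']}."}


def mcp_server_handle(request):
    """Hypothetical MCP server: runs the tool against a backing system."""
    orders = {"A17": "shipped"}  # stands in for a real database
    args = request["params"]["arguments"]
    status = orders.get(args["order_id"], "unknown")
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": status}]}}


def run_turn(user_text, max_steps=5):
    messages = [{"role": "user", "content": user_text}]
    for step in range(max_steps):
        decision = fake_model(messages)
        if "answer" in decision:
            return decision["answer"], messages
        # 1. The model asked for a capability; the client wraps it as JSON-RPC.
        call = decision["tool_call"]
        request = {"jsonrpc": "2.0", "id": step + 1, "method": "tools/call",
                   "params": {"name": call["name"], "arguments": call["arguments"]}}
        # 2. The server executes it and returns a standardized result.
        response = mcp_server_handle(request)
        text = response["result"]["content"][0]["text"]
        # 3. The result goes back into the model's context for the next step.
        messages.append({"role": "tool", "content": text})
    raise RuntimeError("model did not finish within max_steps")


answer, transcript = run_turn("Where is order A17?")
print(answer)
```

Capping the loop with `max_steps` is a defensive choice: a real model might keep requesting tools, and the host, not the model, should decide when to stop.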

This design ensures that AI systems do not need to understand the internal details of every tool they use. They only need to know how to request capabilities via the protocol.

What an MCP Server Looks Like Conceptually

An MCP server typically includes:

– A protocol interface that accepts structured requests from AI clients

– A capability layer defining available tools, resources, or actions

– Connectors to underlying systems such as databases, APIs, file systems, or services

– Security and access controls to ensure safe and scoped usage
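To make the four components listed above concrete, here is a toy server that keeps each layer visible: a `handle` method as the protocol interface, a `tools` registry with JSON Schema inputs as the capability layer, an in-memory SQLite database as the connector, and a set of granted scopes as the access control. The `OrdersServer` class, the `orders:read` scope string, and the `orders` table are hypothetical; MCP itself does not define this scope scheme. The error codes -32601 (method not found) and -32602 (invalid params) are standard JSON-RPC 2.0 codes.

```python
import sqlite3


class OrdersServer:
    """Toy MCP-style server with the four layers kept visible."""

    def __init__(self, granted_scopes):
        # Connector: an in-memory SQLite database stands in for a real system.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
        self.db.executemany(
            "INSERT INTO orders VALUES (?, ?)", [("A17", "shipped"), ("B02", "pending")]
        )
        # Access control: scopes granted to this caller (hypothetical scheme).
        self.granted_scopes = set(granted_scopes)
        # Capability layer: exposed tools, each with a JSON Schema for its inputs.
        self.tools = {
            "get_order_status": {
                "description": "Look up the status of one order.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"order_id": {"type": "string"}},
                    "required": ["order_id"],
                },
                "scope": "orders:read",
                "run": self._get_order_status,
            },
        }

    def _get_order_status(self, order_id):
        row = self.db.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0] if row else "not found"

    def _allowed(self, tool):
        return tool["scope"] in self.granted_scopes

    # Protocol interface: take a JSON-RPC request dict, return a response dict.
    def handle(self, msg):
        method, msg_id = msg.get("method"), msg.get("id")
        if method == "tools/list":
            visible = [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in self.tools.items()
                if self._allowed(t)
            ]
            return {"jsonrpc": "2.0", "id": msg_id, "result": {"tools": visible}}
        if method == "tools/call":
            params = msg.get("params", {})
            tool = self.tools.get(params.get("name"))
            if tool is None or not self._allowed(tool):
                return self._error(msg_id, -32602, "Unknown or unauthorized tool")
            args = params.get("arguments", {})
            schema = tool["inputSchema"]
            missing = [k for k in schema["required"] if k not in args]
            if missing:
                return self._error(msg_id, -32602, f"Missing arguments: {missing}")
            # Pass only declared properties so unexpected keys never reach the connector.
            known = {k: v for k, v in args.items() if k in schema["properties"]}
            text = tool["run"](**known)
            return {
                "jsonrpc": "2.0",
                "id": msg_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": False},
            }
        return self._error(msg_id, -32601, "Method not found")

    @staticmethod
    def _error(msg_id, code, message):
        return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


server = OrdersServer(granted_scopes={"orders:read"})
reply = server.handle({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A17"}},
})
print(reply["result"]["content"][0]["text"])
```

Returning the same error for unknown and unauthorized tools avoids telling a caller without the scope which tools exist; that is a judgment call in this sketch, not an MCP requirement.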

The server may run locally for low-latency or privacy-sensitive use cases, or remotely to support many AI clients at scale.
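For the local case, MCP's stdio transport has the client launch the server as a subprocess and exchange newline-delimited JSON-RPC messages over its standard input and output; remote servers typically use an HTTP-based transport (Streamable HTTP in current versions of the specification). A minimal sketch of the stdio framing follows, with `io.StringIO` standing in for `sys.stdin` and `sys.stdout` so it runs without a subprocess. `serve_stdio` and `echo_handler` are hypothetical names, and the handler only understands `ping`.

```python
import io
import json


def serve_stdio(stdin, stdout, handle):
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        message = json.loads(line)
        if "id" not in message:
            continue  # notifications carry no id and get no response
        response = handle(message)
        # json.dumps without indent escapes newlines in strings, so output stays one line.
        stdout.write(json.dumps(response) + "\n")
        stdout.flush()


def echo_handler(message):
    """Hypothetical handler: answers 'ping' and rejects every other method."""
    if message["method"] == "ping":
        return {"jsonrpc": "2.0", "id": message["id"], "result": {}}
    return {"jsonrpc": "2.0", "id": message["id"],
            "error": {"code": -32601, "message": "Method not found"}}


# In a real local server these would be sys.stdin and sys.stdout.
fake_in = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n'
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
    '{"jsonrpc": "2.0", "id": 2, "method": "tools/list"}\n'
)
fake_out = io.StringIO()
serve_stdio(fake_in, fake_out, echo_handler)
print(fake_out.getvalue())
```

Because stdout carries the protocol itself, a stdio server should send its own logs to stderr; stray prints on stdout would corrupt the message stream.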

Advantages of MCP Servers

- Standardization and Reuse

MCP servers eliminate one-off integrations. A capability exposed once can be reused across many AI agents and applications.

- Modularity and Composability

Each MCP server focuses on a specific domain. Multiple servers can be combined to give AI systems broad functionality without tight coupling.

- Improved Context and Accuracy

By giving the model live, authoritative data to ground its answers, MCP servers can reduce hallucinations and improve response quality.

- Faster Integration and Iteration

New tools can be added by deploying a server rather than rewriting AI logic.

- Scalability

MCP servers can support anything from single-user assistants to enterprise-scale deployments.

- Security and Isolation

AI systems only access what is explicitly exposed. Sensitive systems remain protected behind controlled interfaces.
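One way a server can enforce "only what is explicitly exposed" for a database connector is to make the connection itself read-only, so even a tool that accepts free-form SQL cannot modify data. The sketch below uses SQLite's authorizer hook through Python's `sqlite3.Connection.set_authorizer`. The `run_query_tool` function and `customers` table are hypothetical, and a production server would typically also connect with a read-only database account rather than rely on this check alone.

```python
import sqlite3


def make_read_only(conn):
    """Install an authorizer that lets this connection run plain SELECTs only."""
    allowed = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ}

    def authorizer(action, arg1, arg2, db_name, trigger_or_view):
        # SQLite calls this while compiling each statement; DENY aborts compilation.
        return sqlite3.SQLITE_OK if action in allowed else sqlite3.SQLITE_DENY

    conn.set_authorizer(authorizer)


def run_query_tool(conn, sql):
    """Hypothetical body of a 'run_query' tool: rows on success, a refusal otherwise."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return f"refused: {exc}"


# isolation_level=None avoids implicit BEGIN statements around writes.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
make_read_only(conn)  # setup is done; from here on the connection is read-only

print(run_query_tool(conn, "SELECT name FROM customers"))
print(run_query_tool(conn, "DELETE FROM customers"))
```

This allowlist is deliberately strict: it also blocks SQL functions such as `COUNT()`, so widening it is an explicit decision rather than a default.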

Real-World Applications

- Enterprise AI Assistants

An AI assistant can use MCP servers to query internal databases, retrieve customer records, or generate reports using live data.

- Customer Support Automation

AI agents can check order status, create tickets, or trigger refunds through MCP servers connected to operational systems.

- Developer Productivity Tools

AI copilots can analyze repositories, inspect logs, and assist with debugging using MCP servers that expose development tools.

- Document and Knowledge Management

AI systems can search, summarize, and analyze documents via file system or document store MCP servers.

- Workflow Automation

MCP servers allow AI to orchestrate multi-step workflows across systems such as scheduling, messaging, and task management.
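For the document and knowledge management case, MCP also defines resources, which a server lists via `resources/list` and returns via `resources/read`. The sketch below is a hypothetical `DocsResourceServer` that exposes `.txt` files under a single root directory and refuses paths that escape it. Two simplifications are assumptions: results are plain Python dictionaries modelled on the resource result shape, and `read_resource` takes a relative path, whereas a real `resources/read` request identifies the resource by its `uri`. `Path.is_relative_to` requires Python 3.9 or later.

```python
import tempfile
from pathlib import Path


class DocsResourceServer:
    """Hypothetical server exposing text files under one root as MCP-style resources."""

    def __init__(self, root):
        self.root = Path(root).resolve()

    def list_resources(self):
        # Modelled on a resources/list result: uri, name, mimeType per entry.
        return [{"uri": p.as_uri(), "name": p.name, "mimeType": "text/plain"}
                for p in sorted(self.root.rglob("*.txt"))]

    def read_resource(self, relative_path):
        target = (self.root / relative_path).resolve()
        # Refuse anything that escapes the exposed root, e.g. "../secrets.txt".
        if not target.is_relative_to(self.root):
            raise PermissionError(f"{relative_path} is outside the exposed root")
        return {"contents": [{"uri": target.as_uri(), "mimeType": "text/plain",
                              "text": target.read_text(encoding="utf-8")}]}


with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "handbook.txt").write_text("Refunds take 5 days.", encoding="utf-8")
    server = DocsResourceServer(tmp)
    print([r["name"] for r in server.list_resources()])
    print(server.read_resource("handbook.txt")["contents"][0]["text"])
    try:
        server.read_resource("../etc/passwd")
    except PermissionError as exc:
        print("blocked:", exc)
```

Resolving the path before the containment check matters: comparing unresolved strings would let `..` segments slip past the root.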

The architecture diagram above shows how the AI application, an Anthropic model in this example, delegates real-world interactions to the MCP server while remaining decoupled from system-specific logic.

Conclusion

MCP servers are foundational infrastructure for modern AI systems. They transform AI from a conversational interface into an operational layer capable of interacting with real systems. By standardizing how AI connects to tools and data, MCP servers enable scalable, secure, and maintainable AI architectures. As AI adoption grows, MCP servers provide the architectural backbone that allows intelligence to translate into real-world action.